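Using a Pretrained Network in Keras: Transfer Learning, Feature Extraction and Data Augmentation with VGG16

Transfer learning reuses a network pretrained on a large dataset (here VGG16 trained on ImageNet) to improve results on a small dataset.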

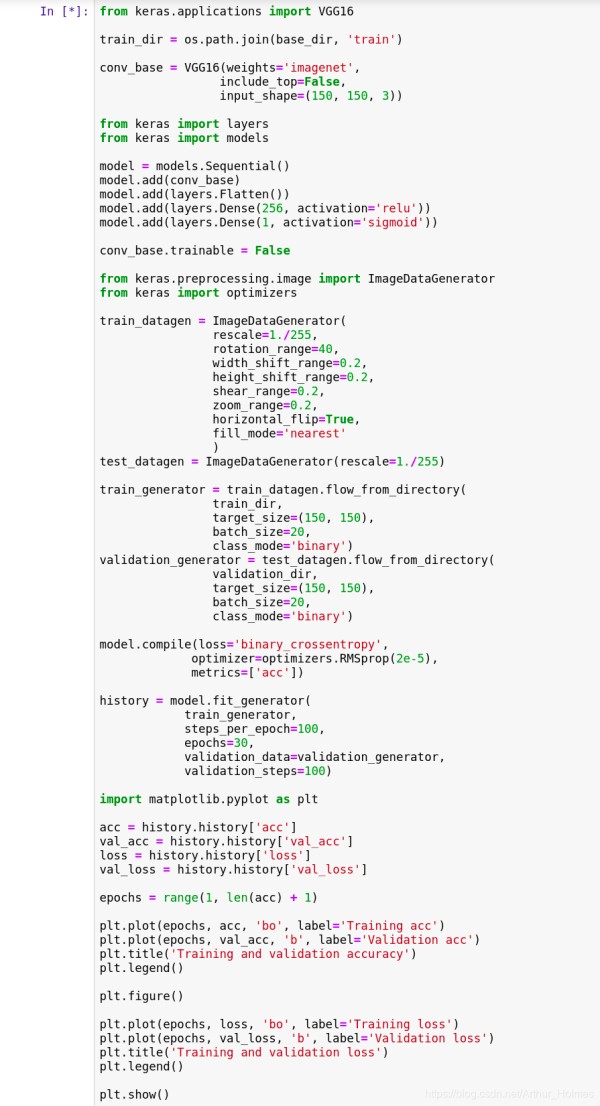

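Code: first, load the VGG16 convolutional base pretrained on ImageNet, freeze it, and stack a new binary classifier on top.

```python
import os

from keras.applications import VGG16
from keras import layers
from keras import models

# Placeholder: the original post does not show base_dir; point it at your dataset root.
# Each split directory is expected to contain one subfolder per class.
base_dir = '/path/to/dataset'
train_dir = os.path.join(base_dir, 'train')
# Assumed layout: the original uses validation_dir without defining it.
validation_dir = os.path.join(base_dir, 'validation')

# VGG16 convolutional base with ImageNet weights; include_top=False drops
# the original densely connected ImageNet classifier
conv_base = VGG16(weights='imagenet',
                  include_top=False,
                  input_shape=(150, 150, 3))

# New classifier stacked on top of the pretrained base
model = models.Sequential()
model.add(conv_base)
model.add(layers.Flatten())
model.add(layers.Dense(256, activation='relu'))
model.add(layers.Dense(1, activation='sigmoid'))

# Freeze the convolutional base so only the new Dense layers are trained.
# This must be set before model.compile() to take effect.
conv_base.trainable = False
```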
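As a sanity check on the architecture above, the sketch below recomputes by hand the shape of the convolutional base's output and the parameter counts. It assumes the standard VGG16 layout (13 convolutions with 3x3 kernels and 'same' padding, grouped into five blocks that each end in a 2x2 max-pool with stride 2); the names `VGG16_BLOCKS`, `conv_base_summary` and `dense_params` are hypothetical helpers, and the numbers can be cross-checked against `model.summary()`.

```python
# (filters, number of 3x3 conv layers) for each of VGG16's five blocks
VGG16_BLOCKS = [(64, 2), (128, 2), (256, 3), (512, 3), (512, 3)]


def conv_base_summary(height, width, channels=3):
    """Return (output shape, parameter count) of the VGG16 conv base."""
    params = 0
    in_ch = channels
    for filters, n_convs in VGG16_BLOCKS:
        for _ in range(n_convs):
            # 3x3 kernel weights for every (input, output) channel pair + one bias per filter
            params += 3 * 3 * in_ch * filters + filters
            in_ch = filters
        # 'same' convs keep H and W; the 2x2 max-pool (stride 2) halves them, rounding down
        height, width = height // 2, width // 2
    return (height, width, in_ch), params


def dense_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out


out_shape, frozen = conv_base_summary(150, 150)
flat = out_shape[0] * out_shape[1] * out_shape[2]           # size after Flatten
trainable = dense_params(flat, 256) + dense_params(256, 1)  # the two new Dense layers
print(out_shape, flat, frozen, trainable)  # (4, 4, 512) 8192 14714688 2097665
```

With `conv_base.trainable = False`, only the roughly 2.1 million weights of the two new Dense layers are updated; the roughly 14.7 million weights of the convolutional base keep their ImageNet values.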

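Data generators: augmentation is applied to the training images only.

```python
from keras.preprocessing.image import ImageDataGenerator
from keras import optimizers

# Training data: rescale pixels to [0, 1] and apply random augmentation
train_datagen = ImageDataGenerator(
    rescale=1./255,
    rotation_range=40,        # random rotations of up to 40 degrees
    width_shift_range=0.2,    # random horizontal shifts (fraction of width)
    height_shift_range=0.2,   # random vertical shifts (fraction of height)
    shear_range=0.2,          # random shear transformations
    zoom_range=0.2,           # random zoom in or out
    horizontal_flip=True,     # randomly flip images horizontally
    fill_mode='nearest')      # fill newly created pixels with the nearest pixel value

# Validation data must not be augmented; it is only rescaled
test_datagen = ImageDataGenerator(rescale=1./255)

train_generator = train_datagen.flow_from_directory(
    train_dir,
    target_size=(150, 150),   # resize every image to 150x150
    batch_size=20,
    class_mode='binary')      # binary labels, matching binary_crossentropy

validation_generator = test_datagen.flow_from_directory(
    validation_dir,
    target_size=(150, 150),
    batch_size=20,
    class_mode='binary')
```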
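To make two of the augmentation settings above concrete, here is a minimal NumPy sketch of `rescale=1./255` and `horizontal_flip=True` applied to a single image. It is a simplified stand-in, not Keras's own code: the function `augment` and the random test image are hypothetical, and rotation, shifts, shear and zoom are omitted.

```python
import numpy as np


def augment(image, rng):
    """Rescale to [0, 1] and flip horizontally with probability 0.5.

    image: uint8 array in (height, width, channels) layout.
    """
    x = image.astype(np.float32) / 255.0  # same effect as rescale=1./255
    if rng.random() < 0.5:                # flip roughly half of the images
        x = x[:, ::-1, :]                 # reverse the width axis (left-right mirror)
    return x


rng = np.random.default_rng(0)
# Hypothetical 150x150 RGB image with random pixel values
img = rng.integers(0, 256, size=(150, 150, 3)).astype(np.uint8)
out = augment(img, rng)
print(out.shape, out.min() >= 0.0, out.max() <= 1.0)
```

Because a fresh random transformation is drawn every time an image is served, the model rarely sees exactly the same input twice, which is why augmentation helps on small datasets; the validation generator skips it so that validation scores reflect unmodified images.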

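Compile and train:

```python
model.compile(loss='binary_crossentropy',
              optimizer=optimizers.RMSprop(2e-5),  # small learning rate: 2e-5
              metrics=['acc'])                     # logged as 'acc' / 'val_acc'

# In TensorFlow 2.1+ (tf.keras), fit_generator is deprecated and model.fit
# accepts generators directly.
history = model.fit_generator(
    train_generator,
    steps_per_epoch=100,
    epochs=30,
    validation_data=validation_generator,
    validation_steps=50)  # one full pass over the 1000 validation images (batch size 20)
```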
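The step counts follow from the dataset sizes reported by `flow_from_directory` (1998 training and 1000 validation images, shown in the output below) and the batch size of 20. A minimal sketch of that arithmetic, with `steps_for` as a hypothetical helper:

```python
import math


def steps_for(num_images, batch_size):
    """Batches needed to cover every image once (the last batch may be partial)."""
    return math.ceil(num_images / batch_size)


print(steps_for(1998, 20), steps_for(1000, 20))  # 100 50
```

The iterators returned by `flow_from_directory` loop indefinitely, so a larger `validation_steps` does not fail; it simply evaluates the same validation images more than once per epoch.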

import matplotlib.pyplot as plt

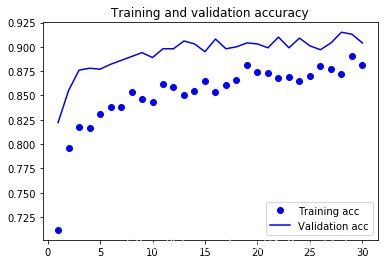

acc = history.history['acc']

val_acc = history.history['val_acc']

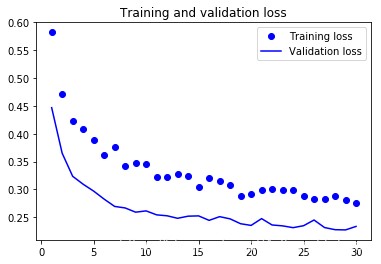

loss = history.history['loss']

val_loss = history.history['val_loss']

epochs = range(1, len(acc) + 1)

plt.plot(epochs, acc, 'bo', label='Training acc')

plt.plot(epochs, val_acc, 'b', label='Validation acc')

plt.title('Training and validation accuracy')

plt.legend()

plt.figure()

plt.plot(epochs, loss, 'bo', label='Training loss')

plt.plot(epochs, val_loss, 'b', label='Validation loss')

plt.title('Training and validation loss')

plt.legend()

plt.show()

Output:

```
Found 1998 images belonging to 2 classes.
Found 1000 images belonging to 2 classes.
Epoch 1/30
100/100 [==============================] - 63s 627ms/step - loss: 0.5820 - acc: 0.7119 - val_loss: 0.4467 - val_acc: 0.8220
Epoch 2/30
100/100 [==============================] - 62s 622ms/step - loss: 0.4714 - acc: 0.7957 - val_loss: 0.3649 - val_acc: 0.8550
Epoch 3/30
100/100 [==============================] - 63s 630ms/step - loss: 0.4236 - acc: 0.8173 - val_loss: 0.3235 - val_acc: 0.8760
Epoch 4/30
100/100 [==============================] - 63s 630ms/step - loss: 0.4076 - acc: 0.8164 - val_loss: 0.3093 - val_acc: 0.8780
Epoch 5/30
100/100 [==============================] - 63s 626ms/step - loss: 0.3893 - acc: 0.8309 - val_loss: 0.2969 - val_acc: 0.8770
Epoch 6/30
100/100 [==============================] - 62s 625ms/step - loss: 0.3626 - acc: 0.8383 - val_loss: 0.2825 - val_acc: 0.8820
Epoch 7/30
100/100 [==============================] - 62s 625ms/step - loss: 0.3753 - acc: 0.8383 - val_loss: 0.2694 - val_acc: 0.8860
Epoch 8/30
100/100 [==============================] - 63s 625ms/step - loss: 0.3414 - acc: 0.8533 - val_loss: 0.2667 - val_acc: 0.8900
Epoch 9/30
100/100 [==============================] - 62s 625ms/step - loss: 0.3469 - acc: 0.8458 - val_loss: 0.2591 - val_acc: 0.8940
Epoch 10/30
100/100 [==============================] - 63s 625ms/step - loss: 0.3455 - acc: 0.8428 - val_loss: 0.2614 - val_acc: 0.8890
Epoch 11/30
100/100 [==============================] - 63s 626ms/step - loss: 0.3221 - acc: 0.8619 - val_loss: 0.2543 - val_acc: 0.8980
Epoch 12/30
100/100 [==============================] - 63s 625ms/step - loss: 0.3227 - acc: 0.8584 - val_loss: 0.2526 - val_acc: 0.8980
Epoch 13/30
100/100 [==============================] - 63s 627ms/step - loss: 0.3280 - acc: 0.8504 - val_loss: 0.2481 - val_acc: 0.9060
Epoch 14/30
100/100 [==============================] - 63s 626ms/step - loss: 0.3243 - acc: 0.8543 - val_loss: 0.2519 - val_acc: 0.9030
Epoch 15/30
100/100 [==============================] - 63s 626ms/step - loss: 0.3035 - acc: 0.8649 - val_loss: 0.2525 - val_acc: 0.8950
Epoch 16/30
100/100 [==============================] - 63s 626ms/step - loss: 0.3204 - acc: 0.8534 - val_loss: 0.2444 - val_acc: 0.9080
Epoch 17/30
100/100 [==============================] - 62s 625ms/step - loss: 0.3148 - acc: 0.8604 - val_loss: 0.2511 - val_acc: 0.8980
Epoch 18/30
100/100 [==============================] - 62s 625ms/step - loss: 0.3078 - acc: 0.8663 - val_loss: 0.2471 - val_acc: 0.9000
Epoch 19/30
100/100 [==============================] - 62s 625ms/step - loss: 0.2878 - acc: 0.8814 - val_loss: 0.2384 - val_acc: 0.9040
Epoch 20/30
100/100 [==============================] - 63s 626ms/step - loss: 0.2925 - acc: 0.8739 - val_loss: 0.2353 - val_acc: 0.9030
Epoch 21/30
100/100 [==============================] - 63s 626ms/step - loss: 0.2980 - acc: 0.8729 - val_loss: 0.2475 - val_acc: 0.8990
Epoch 22/30
100/100 [==============================] - 63s 626ms/step - loss: 0.3013 - acc: 0.8684 - val_loss: 0.2361 - val_acc: 0.9100
Epoch 23/30
100/100 [==============================] - 63s 627ms/step - loss: 0.2995 - acc: 0.8694 - val_loss: 0.2345 - val_acc: 0.8990
Epoch 24/30
100/100 [==============================] - 63s 627ms/step - loss: 0.2985 - acc: 0.8654 - val_loss: 0.2313 - val_acc: 0.9090
Epoch 25/30
100/100 [==============================] - 63s 628ms/step - loss: 0.2883 - acc: 0.8704 - val_loss: 0.2348 - val_acc: 0.9010
Epoch 26/30
100/100 [==============================] - 63s 627ms/step - loss: 0.2829 - acc: 0.8798 - val_loss: 0.2451 - val_acc: 0.8970
Epoch 27/30
100/100 [==============================] - 63s 626ms/step - loss: 0.2825 - acc: 0.8772 - val_loss: 0.2315 - val_acc: 0.9040
Epoch 28/30
100/100 [==============================] - 63s 625ms/step - loss: 0.2875 - acc: 0.8724 - val_loss: 0.2277 - val_acc: 0.9150
Epoch 29/30
100/100 [==============================] - 63s 625ms/step - loss: 0.2819 - acc: 0.8903 - val_loss: 0.2273 - val_acc: 0.9130
Epoch 30/30
100/100 [==============================] - 63s 625ms/step - loss: 0.2756 - acc: 0.8808 - val_loss: 0.2335 - val_acc: 0.9040
```
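To read the best epoch off the run numerically rather than from the plot, a small helper can scan the per-epoch validation accuracy. The sketch below uses the `val_acc` values copied from the training log above; in a live session you would pass `history.history['val_acc']` instead. The name `best_epoch` is a hypothetical helper.

```python
# val_acc for epochs 1..30, copied from the training log above
val_acc_log = [
    0.8220, 0.8550, 0.8760, 0.8780, 0.8770, 0.8820, 0.8860, 0.8900, 0.8940, 0.8890,
    0.8980, 0.8980, 0.9060, 0.9030, 0.8950, 0.9080, 0.8980, 0.9000, 0.9040, 0.9030,
    0.8990, 0.9100, 0.8990, 0.9090, 0.9010, 0.8970, 0.9040, 0.9150, 0.9130, 0.9040,
]


def best_epoch(values):
    """Return (1-based epoch, value) of the highest entry; ties go to the earliest epoch."""
    idx = max(range(len(values)), key=values.__getitem__)
    return idx + 1, values[idx]


epoch, score = best_epoch(val_acc_log)
print(epoch, score)  # 28 0.915
```

In this run validation accuracy passes 0.90 by epoch 13 and then fluctuates between roughly 0.89 and 0.915, so the last epochs add little.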

Source: Using a Pretrained Network in Keras: Transfer Learning, Feature Extraction and Data Augmentation with VGG16, https://www.yuejiaxmz.com/news/view/219377
